Move Over, the v0 AI Agent Is Taking the Wheel: Death of the v0-1.5-sm Model, Squashing the Free Tier, and the Cost of Autonomy

I want to echo the frustration I’ve seen regarding the forced migration to the AI Agent mode. I know this may seem like exposition, but I wanted to share my experience in case it helps others, as the situation wasn’t clear to me until after the fact.

But first: I loved v0 in its early stages. The pricing models, as they evolved, seemed to track well with the output produced by the AI copilot experience. Even with the sm, md, lg model breakdown, the pricing seemed to align. I could invoke sm model prompts and tweak designs to a satisfying end result, and it felt like I was truly working collaboratively with the AI. I was able to choose the amount of assistance I needed, and the cost seemed to match.

Jump to the post-AI Agent experience and it feels like the dynamic has flipped. In the example below, I asked for the div background color of these badges to be modified. It's a fairly simple request that in the past would have cost ~0.05 credits, but with the AI Agent it cost 0.39 credits, an increase of 680%, and nearly all of that cost (0.38 credits) was input credits. That means a credit pool that used to allow for extensive collaboration, including the $5.00 free credit plan, can now disappear in just a few prompts. So what's going on?
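For anyone who wants to check the math, here is a minimal sketch of the arithmetic behind those numbers. The 0.05, 0.39, and 0.38 credit figures come from my example above; the function names are just illustrative, and the last calculation assumes (my assumption, not something v0 states here) that the $5.00 free plan works out to a pool of 5.00 credits and that every prompt costs the same, which real prompts won't.

```python
from decimal import Decimal

# Figures from the badge background-color example in this post.
OLD_COST = Decimal("0.05")    # approximate cost with the sm model
NEW_COST = Decimal("0.39")    # cost of the same request with the AI Agent
INPUT_COST = Decimal("0.38")  # portion of the new cost billed as input credits

# ASSUMPTION: the $5.00 free plan is treated as a pool of 5.00 credits.
FREE_POOL = Decimal("5.00")


def percent_increase(old: Decimal, new: Decimal) -> Decimal:
    """Relative increase from old to new, as a percentage."""
    return (new - old) / old * 100


def input_share(input_cost: Decimal, total_cost: Decimal) -> Decimal:
    """Share of the total cost that is input credits, as a percentage (1 decimal)."""
    return round(input_cost / total_cost * 100, 1)


def prompts_per_pool(pool: Decimal, cost_per_prompt: Decimal) -> int:
    """Whole prompts a pool covers if every prompt costs the same (a simplification)."""
    return int(pool // cost_per_prompt)


if __name__ == "__main__":
    print("Increase (%):", percent_increase(OLD_COST, NEW_COST))
    print("Input share (%):", input_share(INPUT_COST, NEW_COST))
    print("Prompts at old cost:", prompts_per_pool(FREE_POOL, OLD_COST))
    print("Prompts at new cost:", prompts_per_pool(FREE_POOL, NEW_COST))
```

This is a flat-rate estimate for one simple request. Larger or multi-step Agent prompts would presumably drain the pool faster, so treat the per-pool counts as a rough upper bound for prompts of this size, not a prediction.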

Gone are the cost-effective sm model and the ability to manually select different models (i.e., to manage your prompt cost). With each prompt, the AI Agent can choose to plan, research, build, and debug. Each of these sub-actions, as well as any follow-up prompts the Agent invokes on its own, draws from your [input] credits and runs unchecked by the user. From an accuracy and efficiency perspective, I’ve encountered more looping conversations, particularly around debugging, where the AI ultimately can’t fix the problem and ends up invoking extra steps to undo what it had just tried, or undoes code changes that I have manually committed (at my cost). I also feel the collaborative experience has suffered, because my focus has shifted to prioritizing bulk-step prompts that I’ve tested in other AI services, in an attempt to maximize individual prompt payoff and reduce repeat debugging.

TL;DR: In essence, my experience with the v0 AI Agent has meant increased cost, increased unpredictability in both prompt cost and output, loss of control, and decreased collaborative intent. All of this has led to increased frustration in how I perceive cost versus output.

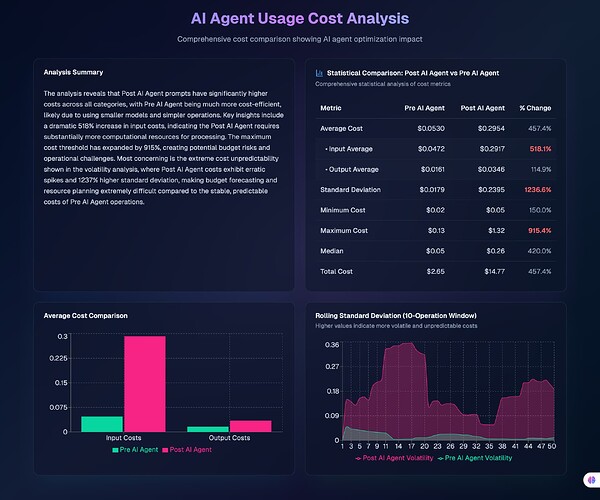

So far I’ve rattled on about my experience and my own subjective observations, so let’s take a look from another perspective. Here are some numbers; they were alarming to realize. I hope that something will change.