Due to your issue and the use of large models, I received nothing despite paying a lot of money for a single response.

Please fix your issues.

Same issue Dear V0 team FIX YOUR ISSUES

FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES FIX YOUR ISSUES

Requests will sometimes fail, that is the nature of the internet

To get best results after an incomplete generation or a big error, go to the previous version and fork the chat so it has no memory of the mistakes

Breaking your prompt into smaller tasks will also improve quality, since it can focus exclusively on one feature and if something goes wrong between features 3/4, you’ve had versions as checkpoints between them to iterate on it

- record voice to audio file

- upload audio files to jingles

- display a list of jingles from DB

- allow user to play jingles

- allow user to schedule jingles to play at certain times

If you’re unhappy with v0 and would like a refund, you can request one at https://vercel.com/help

The internet connection was excellent — I verified it before submitting. All AI tools, including ChatGPT, Claude, and others, worked perfectly, and even 6K YouTube videos streamed without delay. So this is clearly not a network issue.

The problem is with the software itself. If the generation fails, it should not consume tokens. It’s understandable that requests can fail occasionally — that’s part of how the internet works — but charging for a failed or broken response isn’t acceptable.

Also, the large model often thinks for 20 seconds and returns an empty response or empty code blocks, which happens repeatedly and wastes both time and tokens. This should not happen, especially when users are paying for access.

For reference, the md model works fine and does not exhibit these issues.

Regardless, this behavior is not acceptable in a production-quality tool, and when failures happen, users should not be charged.

I have reproduced the issue.

-

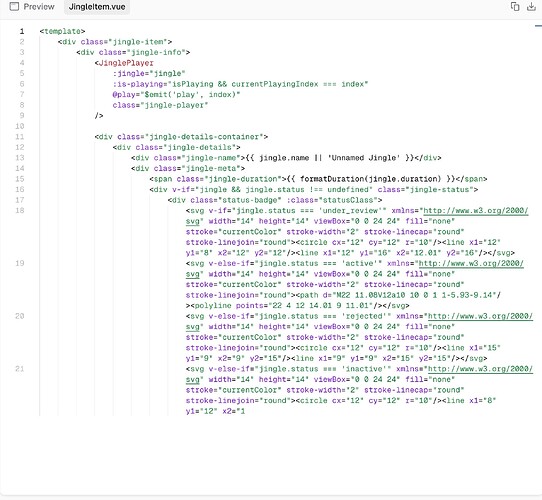

Requesting any code results in the screen shown in Screenshot 1:

-

Click the icon on the right to collapse the file list.

-

Click on the first item in the list (there’s always only one item).

-

You will see this:

The file list opens properly if you do not click the version block and instead click the code switch button:

In that case, it works correctly.

However, if you click the version block to view the generated files, it shows a single file with no code: