Current: adding a system prompt seems to make the gpt-3.5-turbo model lose access to current information. I expect the model to answer the prompt “Tell me about the Vercel AI SDK” equally well after adding the system prompt “You are a warm, emotionally intelligent facilitator helping couples or friends have meaningful conversations, resolve conflicts, plan gatherings, and connect more deeply.”

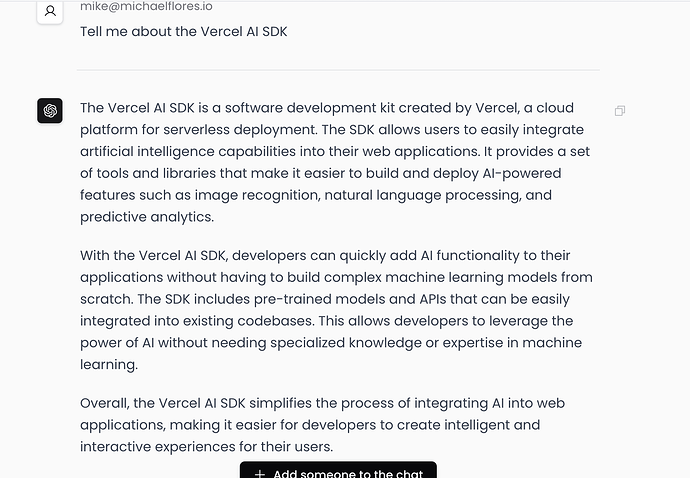

Without system prompt:

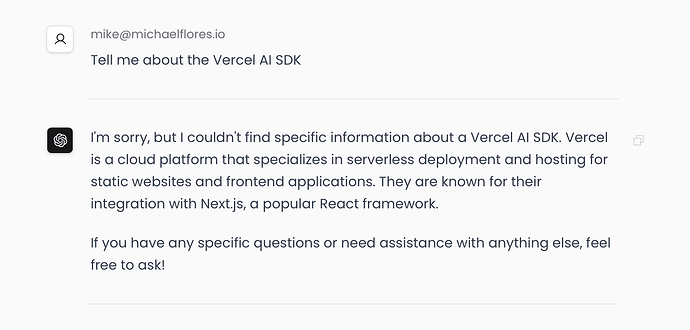

With system prompt:

Expected behavior: I expect the model to still be able to answer this prompt, since the system prompt does not tell it to avoid current information.

Dependencies:

    "@ai-sdk/openai": "^1.3.22",
    "@ai-sdk/react": "^1.2.12",
    "ai": "^4.3.16"

Code sample that causes the issue:

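    import { openai } from '@ai-sdk/openai';
    import { streamText } from 'ai';

    // allMessages is the chat history sent with the request (not shown in this sample).
    const result = streamText({
      model: openai('gpt-3.5-turbo'),
      messages: allMessages,
      system: 'You are a warm, emotionally intelligent facilitator helping couples or friends have meaningful conversations, resolve conflicts, plan gatherings, and connect more deeply. ',
      temperature: 0.7,
    });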
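
One way to narrow this down might be an A/B sketch that sends the same prompt twice, once without and once with `system`, keeping every other option identical. The names below (`Message`, `CallOptions`, `Generate`, `buildVariants`, `compareVariants`) are hypothetical helpers and not part of the AI SDK. `generate` is a caller-supplied function, so the sketch assumes nothing about how the request is actually sent.

```typescript
// Minimal A/B sketch: two request option sets that differ only in `system`.
// Every type and function here is a hypothetical helper, not an AI SDK API.

type Message = { role: 'user' | 'assistant'; content: string };

type CallOptions = {
  messages: Message[];
  temperature: number;
  system?: string;
};

// Caller-supplied function that sends one request and resolves to the reply text.
type Generate = (options: CallOptions) => Promise<string>;

// Build both variants from the same prompt so `system` is the only difference.
function buildVariants(prompt: string, system: string, temperature = 0.7) {
  const base: CallOptions = {
    messages: [{ role: 'user', content: prompt }],
    temperature,
  };
  return {
    withoutSystem: base,
    withSystem: { ...base, system },
  };
}

// Run the two variants one after the other and return both replies.
async function compareVariants(generate: Generate, prompt: string, system: string) {
  const { withoutSystem, withSystem } = buildVariants(prompt, system);
  const plain = await generate(withoutSystem);
  const prompted = await generate(withSystem);
  return { plain, prompted };
}
```

With the packages listed above, `generate` could plausibly be wired as `(options) => generateText({ model: openai('gpt-3.5-turbo'), ...options }).then((r) => r.text)`, though that wiring is not exercised here. Passing a lower `temperature` to `buildVariants` should reduce run-to-run variation and make the two replies easier to compare.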

Not relevant in this case, as I haven’t deployed the change including the system prompt because of this behavior. I can put up a preview deploy you can request access to if that’s the only way to reproduce this behavior, but given the minimal repro guidance I suspect that might not be needed.